CrowdStrike Outage: How Better DevOps Could Have Prevented a Global Incident

The recent global outage of CrowdStrike's Falcon platform on July 19, 2024, serves as a stark reminder of the critical importance of robust DevOps practices in today's fast-paced, interconnected tech landscape. As a DevOps professional, I can't help but see this incident as a clear example of how proper implementation of DevOps principles could have prevented or significantly mitigated the impact of such an outage.

The Incident

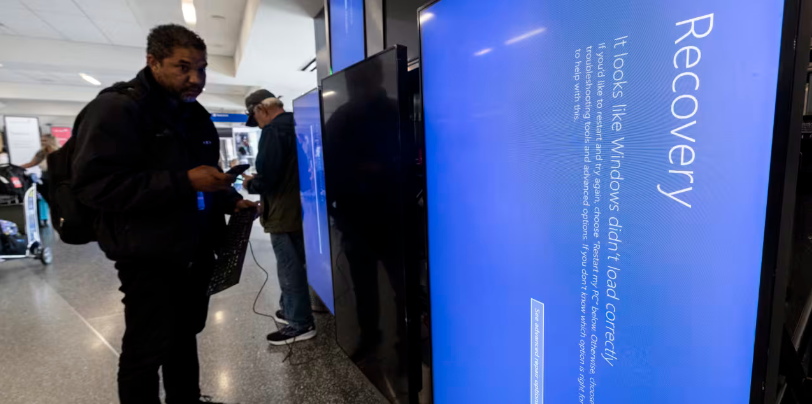

CrowdStrike, a leader in cloud-delivered endpoint and workload protection, experienced a widespread outage due to a problematic update to its Falcon sensor for Windows systems. The update, intended to enhance telemetry gathering capabilities, contained a logic error that caused affected systems to crash, resulting in a blue screen of death (BSOD) on millions of Windows devices worldwide (about 8.5 million, by Microsoft's estimate).

DevOps Shortcomings

Analyzing the incident from a DevOps perspective, several key areas stand out where improved practices could have made a difference:

1. Inadequate Testing and Validation

The faulty update passed through CrowdStrike's Content Validator software due to a bug in the validator itself. This highlights a critical weakness in their testing and validation processes. A robust DevOps pipeline should include:

- Comprehensive unit testing

- Integration testing

- System-level testing

- Automated regression testing

- Performance testing under various conditions
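
To make the testing point concrete, here is a minimal sketch of a content validator that can itself be covered by unit and regression tests. The `ContentUpdate` type, the `validate_update` function, and the four-field schema are all hypothetical names and values chosen for illustration; they do not describe CrowdStrike's actual update format or validator.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed schema size for this sketch; not a real CrowdStrike value.
EXPECTED_FIELD_COUNT = 4


@dataclass(frozen=True)
class ContentUpdate:
    """Hypothetical content update: a name plus an ordered tuple of field values."""
    name: str
    fields: Tuple[str, ...]


def validate_update(update: ContentUpdate) -> List[str]:
    """Return a list of validation errors; an empty list means the update passed."""
    errors: List[str] = []
    # Reject updates whose field count does not match what the consumer expects.
    if len(update.fields) != EXPECTED_FIELD_COUNT:
        errors.append(
            f"expected {EXPECTED_FIELD_COUNT} fields, got {len(update.fields)}"
        )
    # Reject blank fields, which a consumer might otherwise read as valid data.
    for index, value in enumerate(update.fields):
        if not value.strip():
            errors.append(f"field {index} is empty")
    return errors
```

Because the validator is a plain function that returns its findings, each known-bad input can be pinned as a regression test, so a bug in the validator itself is more likely to be caught before it lets a faulty update through.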

2. Lack of Staged Rollouts

One of the most glaring oversights was the absence of a staged rollout strategy. DevOps best practices advocate for:

- Canary deployments

- Blue-green deployments

- Gradual rollouts with monitoring
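
A gradual rollout with a health gate can be sketched in a few lines. This is an illustration under stated assumptions, not a description of any real deployment system: `staged_rollout`, the `deploy` and `is_healthy` callbacks, the ring layout, and the 1% failure budget are all hypothetical.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    rings: Sequence[Sequence[str]],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    max_failure_rate: float = 0.01,
) -> List[str]:
    """Deploy ring by ring and stop once a ring exceeds the failure budget.

    Returns the hosts that received the update.
    """
    deployed: List[str] = []
    for ring in rings:
        for host in ring:
            deploy(host)
            deployed.append(host)
        # Check the health of the ring just updated before moving on.
        failures = sum(1 for host in ring if not is_healthy(host))
        if ring and failures / len(ring) > max_failure_rate:
            break  # halt: later rings never receive the update
    return deployed
```

With rings ordered from a small canary group to the full fleet, an update that crashes its hosts stops at the first ring instead of reaching every machine at once.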

3. Insufficient Monitoring and Alerting

The rapid spread of the issue suggests that CrowdStrike's monitoring and alerting systems were not robust enough to quickly detect and respond to the anomaly. A well-implemented DevOps observability stack should include:

- Real-time performance monitoring

- Error rate tracking

- User experience monitoring

- Automated alerting systems
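
Error rate tracking with automated alerting can be as simple as a sliding window over recent check-ins. The sketch below is a hypothetical `ErrorRateMonitor`; the window size and the 5% threshold are assumed values, and a production system would typically rely on a dedicated monitoring stack rather than hand-rolled code.

```python
from collections import deque


class ErrorRateMonitor:
    """Track the error rate over the most recent check-ins (sliding window)."""

    def __init__(self, window_size: int = 100, threshold: float = 0.05) -> None:
        self.window = deque(maxlen=window_size)  # oldest entries drop off automatically
        self.threshold = threshold

    def record(self, ok: bool) -> bool:
        """Record one check-in; return True if the alert should fire."""
        self.window.append(ok)
        # Wait for a full window so a single early failure does not page anyone.
        window_full = len(self.window) == self.window.maxlen
        error_rate = self.window.count(False) / len(self.window)
        return window_full and error_rate > self.threshold
```

Feeding each host heartbeat or crash report into `record` gives a signal that could pause a rollout automatically once failures climb above the threshold.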

4. Inadequate Rollback Mechanisms

The faulty update stayed live for 78 minutes before it was reverted, which suggests that CrowdStrike may not have had efficient rollback mechanisms in place. DevOps best practices include:

- Automated rollback procedures

- Version control for all configurations

- Immutable infrastructure principles
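
An automated rollback procedure ties a release to a health check and reverts without waiting for a human. The `ReleaseManager` class below is a hypothetical sketch: the version strings and the `health_check` callback are assumptions made for illustration.

```python
from typing import Callable, List


class ReleaseManager:
    """Keep an ordered history of active versions and revert on a failed check."""

    def __init__(self, initial_version: str) -> None:
        self.history: List[str] = [initial_version]

    @property
    def current(self) -> str:
        """The version that is currently active."""
        return self.history[-1]

    def release(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Activate `version`; revert to the previous one if the check fails."""
        self.history.append(version)
        if health_check(version):
            return True
        self.history.pop()  # automated rollback to the last known-good version
        return False
```

Keeping every version in history, rather than overwriting it in place, is what makes the revert a single cheap step.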

5. Lack of Chaos Engineering

Implementing chaos engineering practices could have helped CrowdStrike identify potential failure modes in their systems before they occurred in production. This includes:

- Simulating various failure scenarios

- Testing system resilience regularly

- Identifying and addressing single points of failure
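
Simulating a failure scenario can start small, with a wrapper that injects faults into a dependency so the calling code's resilience can be tested. Both `with_fault_injection` and `fetch_with_fallback` are hypothetical helpers written for this sketch; dedicated chaos engineering tools operate at the infrastructure level, not inside a single process.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def with_fault_injection(
    func: Callable[[], T], failure_rate: float, rng: random.Random
) -> Callable[[], T]:
    """Wrap func so that a fraction of calls raise, simulating a flaky dependency."""
    def wrapper() -> T:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return func()
    return wrapper


def fetch_with_fallback(fetch: Callable[[], T], default: T) -> T:
    """Code under test: it must degrade to a default instead of crashing."""
    try:
        return fetch()
    except ConnectionError:
        return default
```

Running the code under test against the wrapped dependency at different failure rates shows whether it degrades gracefully or takes the whole system down with it.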

6. Moving Forward: DevOps Improvements

7. Post-Incident Reviews: Conduct thorough post-incident reviews (like the one CrowdStrike has committed to) and ensure learnings are integrated into future processes.

Conclusion

The CrowdStrike outage serves as a valuable lesson for the entire tech industry. It underscores the critical importance of robust DevOps practices in maintaining the reliability and security of systems that millions depend on daily. By embracing these principles and continuously improving their processes, organizations can significantly reduce the risk of such widespread outages and enhance their ability to respond quickly and effectively when issues do arise.

As we move forward in an increasingly interconnected digital world, the adoption of mature DevOps practices is not just a best practice – it's a necessity for ensuring the stability, security, and reliability of our critical systems.